Using Electronic Assessments to Support the Practical and Conceptual Learning of Data Skills

Conference

65th ISI World Statistics Congress

Format: IPS Abstract - WSC 2025

Keywords: assessments, electronic, online learning

Session: IPS 898 - Electronic Assessment to Master Data Skills

Tuesday 7 October 2 p.m. - 3:40 p.m. (Europe/Amsterdam)

Abstract

Formative assessments can evaluate students' understanding of course material. They enable teachers to monitor progress and identify areas that need attention, which in turn informs course planning. Students, meanwhile, can assess their own understanding, identify areas for improvement, and gain practical experience.

Electronic assessments enhance formative assessment by providing immediate feedback, which increases student engagement (Blondeel, Everaert and Opdecam, 2022) and has a strong influence on student development and course retention (Einig, 2013). Furthermore, electronic assessments can reduce unconscious bias through anonymous marking and give educators insight into student progress (Collison, 2021). These tools can also support mastery by allowing repeated attempts, which enhances long-term retention and improves understanding (Kulik, Kulik and Bangert-Drowns, 1990).

Electronic assessments support both conceptual and practical skills. For example, at the African Institute for Mathematical Sciences (AIMS), we gave students a histogram and asked them to estimate its standard deviation, building intuition and a deeper conceptual understanding that goes beyond merely performing calculations.
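One way a reference answer for such a question could be computed is the grouped-data approximation, which places every observation at the midpoint of its bin. The sketch below assumes that approach and adds a hypothetical marking rule (`within_tolerance`, with an assumed 25% relative tolerance) that accepts estimates close to the reference; neither is confirmed as the method used in the course.

```python
import math


def histogram_sd(edges, counts):
    """Estimate a standard deviation from histogram bins.

    Assumes every observation sits at the midpoint of its bin (the usual
    grouped-data approximation), so this is an estimate, not the exact SD.
    """
    if len(edges) != len(counts) + 1:
        raise ValueError("need exactly one more bin edge than bin counts")
    mids = [(lo + hi) / 2 for lo, hi in zip(edges[:-1], edges[1:])]
    n = sum(counts)
    mean = sum(m * c for m, c in zip(mids, counts)) / n
    # Sample variance with an n - 1 denominator
    var = sum(c * (m - mean) ** 2 for m, c in zip(mids, counts)) / (n - 1)
    return math.sqrt(var)


def within_tolerance(answer, reference, rel_tol=0.25):
    """Hypothetical marking rule: accept answers within rel_tol of the reference."""
    return abs(answer - reference) <= rel_tol * abs(reference)


# Toy histogram: bins [0, 10), [10, 20), [20, 30] with counts 1, 2, 1
reference = histogram_sd([0, 10, 20, 30], [1, 2, 1])  # about 8.16
print(within_tolerance(9, reference), within_tolerance(20, reference))  # True False
```

A tolerance band matters here because the question rewards a sensible estimate rather than an exact calculation.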

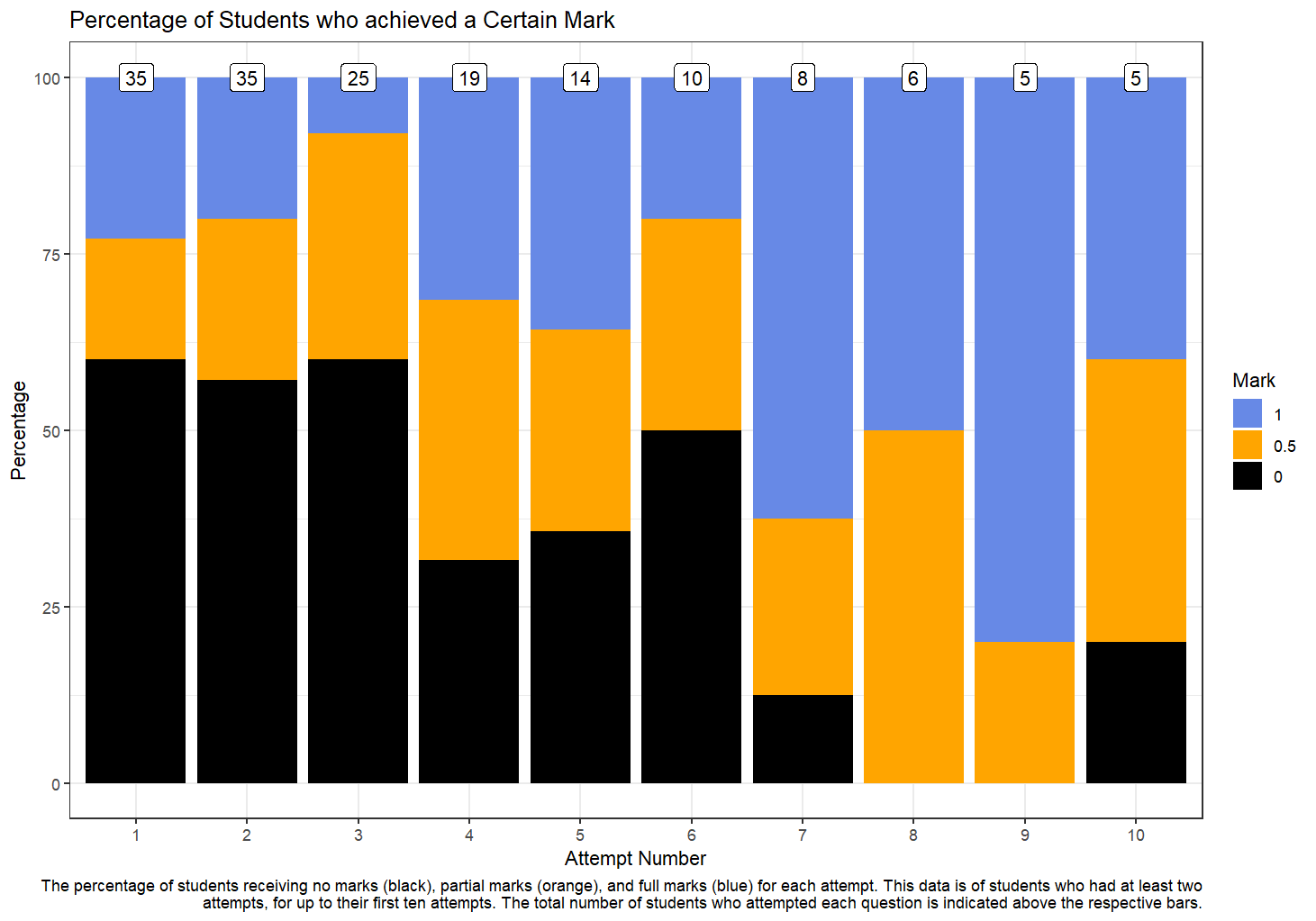

Of the 60 students, 50 completed a practice version of the question, and 35 made two or more attempts. Of these 35, 60% (21/35) scored 0 marks on their first attempt, although this proportion decreased with subsequent attempts. Conversely, only 22.9% (8/35) achieved full marks on their first attempt, although this proportion improved over time.
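Per-attempt figures like these can be derived directly from an assessment platform's attempt log. The following is a minimal sketch assuming a hypothetical log format (a dict from a student ID to that student's scores in attempt order); the toy scores are invented for illustration and are not the AIMS data.

```python
from collections import defaultdict


def attempt_summary(attempts, full_marks):
    """Summarise outcomes by attempt number.

    attempts: dict mapping a (hypothetical) student ID to that student's
    scores in attempt order. Returns {k: (n_attempting, n_zero, n_full)}.
    Each attempt number k has its own denominator: only the students who
    actually made a k-th attempt.
    """
    summary = defaultdict(lambda: [0, 0, 0])
    for scores in attempts.values():
        for k, score in enumerate(scores, start=1):
            row = summary[k]
            row[0] += 1
            row[1] += int(score == 0)
            row[2] += int(score == full_marks)
    return {k: tuple(row) for k, row in sorted(summary.items())}


# Toy log (hypothetical, not the AIMS data); full marks = 4.
log = {"s1": [0, 2, 4], "s2": [4], "s3": [0, 4], "s4": [1, 3]}

# Keep only students with two or more attempts.
repeaters = {sid: s for sid, s in log.items() if len(s) >= 2}
summary = attempt_summary(repeaters, full_marks=4)

for k, (n, zero, full) in summary.items():
    print(f"attempt {k}: {100 * zero / n:.1f}% zero, {100 * full / n:.1f}% full ({n} students)")
```

Reporting the denominator alongside each percentage makes it clear when later attempts involve fewer students.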

Electronic assessments also help develop practical data skills. We used them to embed intentional mistakes or misconceptions in the data, prompting students to confront and correct these issues as part of their learning.

In one task, students were given two rainfall data sets to append. They were then asked to determine which year had the least rainfall, which region had the most, and the total rainfall in millimetres. Several common mistakes were embedded in the task; for example, one data set used millimetres for rainfall measurements, while the other used centimetres.
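The intended workflow for this task can be sketched as follows, assuming each data set is a list of (year, region, rainfall) records and that "least rainfall per year" means the total across regions. The records, region names, and helper names are hypothetical; a course would more likely use a data-frame library, but the standard library keeps the sketch self-contained.

```python
from collections import defaultdict

# Hypothetical records: (year, region, rainfall).
rain_a_mm = [(2019, "North", 820.0), (2019, "South", 410.0), (2020, "North", 760.0)]
# The embedded mistake: this data set records rainfall in centimetres.
rain_b_cm = [(2020, "South", 39.5), (2021, "North", 88.0), (2021, "South", 45.0)]


def append_in_mm(records_mm, records_cm):
    """Append the two data sets after converting centimetres to millimetres."""
    converted = [(year, region, value * 10) for year, region, value in records_cm]
    return records_mm + converted


def totals_by(records, key_index):
    """Sum rainfall grouped by the field at key_index (0 = year, 1 = region)."""
    totals = defaultdict(float)
    for record in records:
        totals[record[key_index]] += record[2]
    return dict(totals)


combined = append_in_mm(rain_a_mm, rain_b_cm)
by_year = totals_by(combined, 0)
by_region = totals_by(combined, 1)

driest_year = min(by_year, key=by_year.get)         # 2020
wettest_region = max(by_region, key=by_region.get)  # "North"
total_mm = sum(value for _, _, value in combined)   # 3715.0
```

With these toy values, appending without the conversion would make 2021 look like the driest year (88 + 45 = 133), which is exactly the kind of error the task is designed to surface.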

Of the nine students who attempted this task, seven made more than one attempt. On their first attempt, all students scored 0 marks. However, 42.9% (3/7) of those making a second attempt achieved full marks, rising to 60% (3/5) of those making a third attempt and 100% (3/3) of those making a fourth. Although the sample is small, it illustrates how iterative electronic assessments can help students identify and correct their errors, thereby strengthening their practical data analysis skills.
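An electronic assessment can shorten this correction loop by recognising anticipated wrong answers and returning a targeted hint. The sketch below is hypothetical: the subtotals, the 0.5% relative tolerance, and the hint wording are assumptions for illustration, not the marking scheme used in the course.

```python
def feedback(answer, correct, anticipated, rel_tol=0.005):
    """Return feedback for a numeric answer.

    anticipated maps the value a known mistake would produce to a hint.
    Matching uses a relative tolerance to allow for rounding.
    """
    def close(a, b):
        return abs(a - b) <= rel_tol * abs(b)

    if close(answer, correct):
        return "Correct"
    for wrong_value, hint in anticipated.items():
        if close(answer, wrong_value):
            return hint
    return "Incorrect: check how the two data sets were combined."


# Hypothetical subtotals for the two data sets before combining.
mm_subtotal = 1990.0  # data set recorded in millimetres
cm_subtotal = 172.5   # data set recorded in centimetres

correct_total = mm_subtotal + 10 * cm_subtotal  # 3715.0 mm
anticipated = {
    mm_subtotal + cm_subtotal: "Check the units: one data set records rainfall in centimetres.",
    mm_subtotal + cm_subtotal / 10: "Check the conversion: 1 cm = 10 mm, so multiply, not divide.",
    mm_subtotal: "Did you append both data sets before summing?",
}

print(feedback(2162.5, correct_total, anticipated))
```

Each anticipated value corresponds to one embedded mistake, so the hint points the student at the specific step to revisit without revealing the answer.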

Overall, the use of electronic assessments provides an adaptive and responsive way to improve learning outcomes, giving students the space to develop both conceptual and practical data skills at their own pace.

References

Blondeel, E., Everaert, P. and Opdecam, E., 2022. Stimulating higher education students to use online formative assessments: the case of two mid-term take-home tests. Assessment & Evaluation in Higher Education, 47(2), pp.297-312.

Collison, P., 2021. Using digital assessment tools for formative assessment. Assessment Blog, 10 February.

Einig, S., 2013. Supporting students' learning: The use of formative online assessments. Accounting Education, 22(5), pp.425-444.

Kulik, C.L.C., Kulik, J.A. and Bangert-Drowns, R.L., 1990. Effectiveness of mastery learning programs: A meta-analysis. Review of Educational Research, 60(2), pp.265-299.

Figures/Tables

Figure: Percentage of students